Performing Bias: Thoughts about Curating Machine Vision Datasets

Linda Maria Jessica Kronman | January 30, 2023 | Reflections

Data must be classified in some way to become useful, as Data Feminism authors Lauren Klein and Catherine D’Ignazio discuss. Yet how we classify matters, and choices about what counts result in biased datasets. In machine learning, the worldviews embedded in training datasets shape how machines perceive the world, and the way humans classify images for datasets in turn affects how machines classify humans. If we then ask when bias is introduced into machine vision, one answer is: when datasets are curated. In the field of computer vision, data curation typically involves what Kate Crawford describes in Atlas of AI as ‘mass data extraction without consent and labeling by underpaid crowdworkers.’

My PhD research investigates how the relationship between bias and machine vision is conceptualized in digital art. Over the past years, I have engaged with questions of machine vision bias through two projects, shifting position from that of a dataset curator to that of a critic of dataset curation. The first, titled ‘Database of Machine Vision in Art, Games and Narratives’ (hereafter Machine Vision Database), is part of the larger digital humanities project Machine Vision in Everyday Life at the University of Bergen in Norway, which explores how machine vision affects us and how we understand ourselves as a society and as individuals. By analyzing art, games, narratives and machine vision apps in social media, the project addresses questions of agency, malleability, objectivity, values and biases in machine vision.

The second project is an artwork called Suspicious Behavior, a speculative annotation tutorial I co-created with Andreas Zingerle as the artist duo KairUs. The artwork addresses the annotation apparatus and the layers of labor typically involved in curating datasets for machine vision. As different as these two projects seem at first glance, both have provided critical insights into the challenges of data curation, and shifting positions in the process has informed my take on machine vision and on the importance of the often undervalued practice of creating datasets for machine learning. Addressing these challenges, which arise when collecting, classifying and cleaning data, is fundamental to developing better and fairer machine vision.

Machine Vision Database – Curating a Dataset

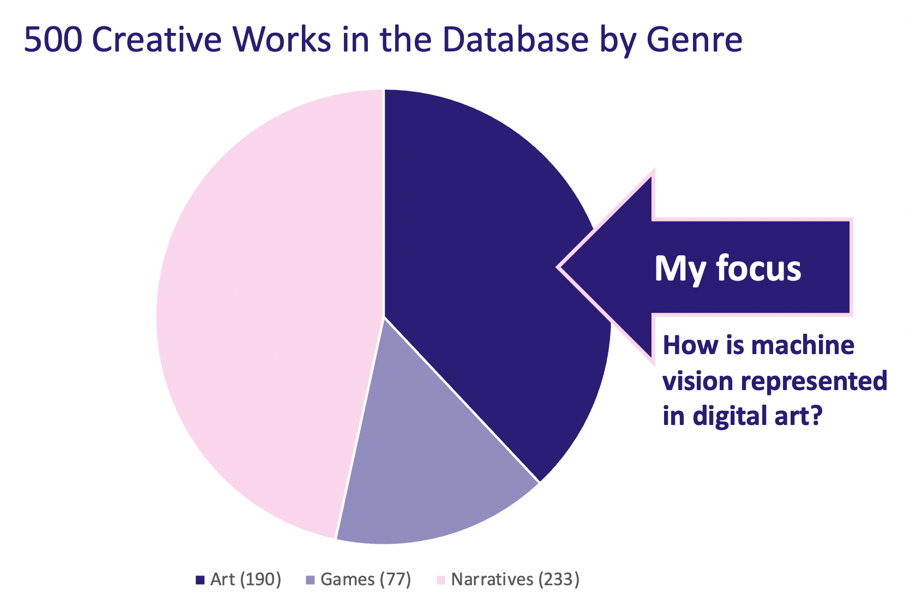

The Machine Vision Database is a collection of 500 creative works (artworks, games and narratives) that reference or use machine vision. Overall, the project comprised a tripartite process: creating a relational database, exporting datasets from the database, and using them for data visualizations. Each stage required our team to make multiple design decisions. Regarding data selection, for example, we discussed which aspects of the creative works to include or dismiss, and how to define machine vision in the first place. We decided on categories and developed workflows to classify works. My contribution also involved selecting, interpreting and logging artworks for the database.

Figure depicting the distribution of creative works in the Machine Vision Database.

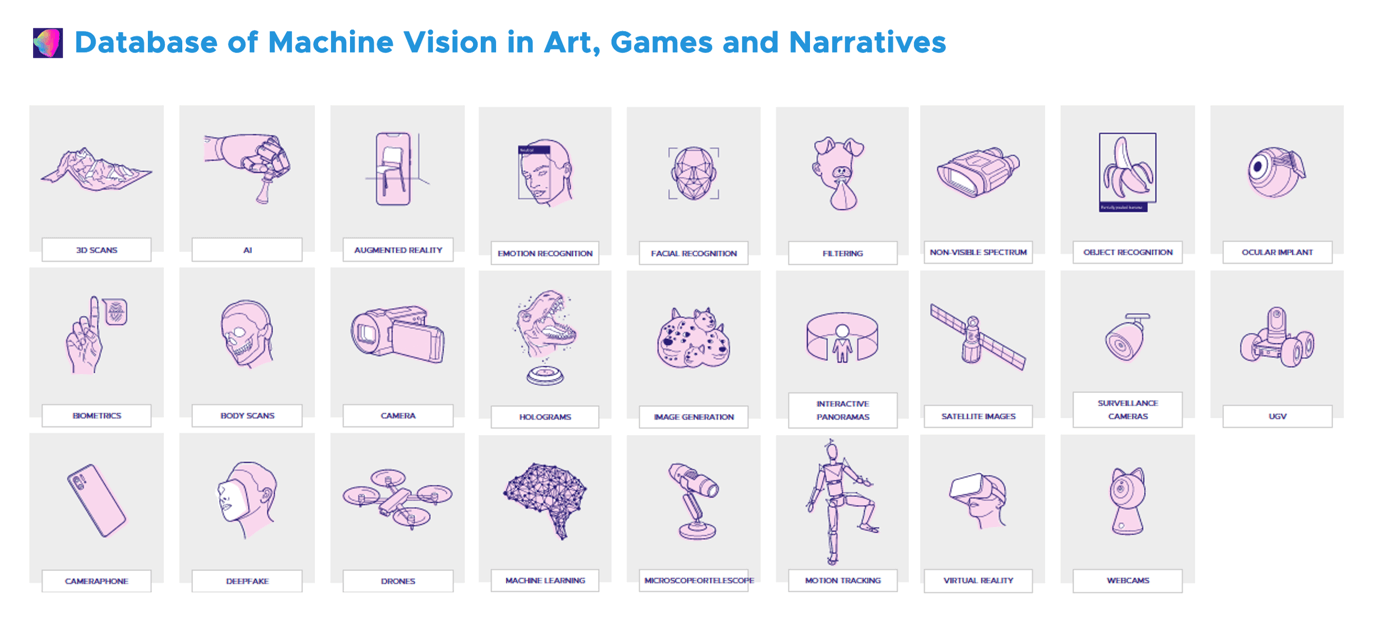

We developed the concept of ‘Machine Vision Situations’ (hereafter Situations) to collect data about interactions between agents. When analyzing and logging creative works into the database, we answered the question ‘who does what?’ for each Situation with descriptive verbs. For example, in a Situation linked to Suspicious Behavior, the character ‘Annotator’ is ‘labeling’, ‘classifying’, ‘interpreting’, ‘deciding’ and ‘guided’. In the same Situation, machine learning and surveillance camera technologies are ‘trained’, ‘detecting’, ‘analyzing’, ‘developed’ and ‘classifying’, while the entity ‘Image’ is ‘labeled’, ‘interpreted’ and ‘classified’. A Situation had to include at least one of the 26 machine vision technologies we defined, and we could also describe the actions of ‘Characters’, with additional attributes (species, gender, race or ethnicity, age, sexuality), and of ‘Entities’, which have no applicable attributes.

The 26 machine vision technologies we defined for the database. Illustrations © Machine Vision in Everyday Life project.
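To make the structure of a Situation more concrete, here is a minimal Python sketch of how such a record could be modeled and validated. It is not the project's actual schema: the class and function names, the `kind` values, and the two-item technology set (a stand-in for the full list of 26, with assumed spelling) are hypothetical. The example encodes the Suspicious Behavior Situation described above and tallies verbs per agent type, the kind of aggregate one might export from a database for a visualization.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical stand-in for the project's 26 technology categories;
# only two labels are included, and their exact spelling is assumed.
KNOWN_TECHNOLOGIES = {"machine learning", "surveillance cameras"}

# The attributes the essay lists for Characters.
CHARACTER_ATTRIBUTES = {"species", "gender", "race or ethnicity", "age", "sexuality"}

# Assumed agent kinds for this sketch.
AGENT_KINDS = {"character", "entity", "technology"}


@dataclass
class Agent:
    name: str
    kind: str    # one of AGENT_KINDS
    verbs: list  # descriptive verbs answering "who does what?"
    attributes: dict = field(default_factory=dict)


@dataclass
class Situation:
    work: str    # title of the creative work the Situation belongs to
    agents: list

    def __post_init__(self):
        for a in self.agents:
            if a.kind not in AGENT_KINDS:
                raise ValueError(f"unknown agent kind {a.kind!r}")
            # Only Characters carry attributes, and only the listed ones.
            if a.kind != "character" and a.attributes:
                raise ValueError(f"only Characters carry attributes, not {a.name!r}")
            extra = set(a.attributes) - CHARACTER_ATTRIBUTES
            if extra:
                raise ValueError(f"unsupported attributes for {a.name!r}: {sorted(extra)}")
        techs = [a for a in self.agents if a.kind == "technology"]
        # Rule from the essay: at least one machine vision technology per Situation.
        if not techs:
            raise ValueError("a Situation needs at least one machine vision technology")
        unknown = [a.name for a in techs if a.name not in KNOWN_TECHNOLOGIES]
        if unknown:
            raise ValueError(f"unknown technologies: {unknown}")


def verb_counts(situations, kind):
    """Count how often each verb is used for agents of one kind."""
    counts = Counter()
    for s in situations:
        for a in s.agents:
            if a.kind == kind:
                counts.update(a.verbs)
    return counts


tech_verbs = ["trained", "detecting", "analyzing", "developed", "classifying"]
suspicious = Situation(
    work="Suspicious Behavior",
    agents=[
        Agent("Annotator", "character",
              ["labeling", "classifying", "interpreting", "deciding", "guided"]),
        Agent("machine learning", "technology", tech_verbs),
        Agent("surveillance cameras", "technology", tech_verbs),
        Agent("Image", "entity", ["labeled", "interpreted", "classified"]),
    ],
)
print(verb_counts([suspicious], "technology")["classifying"])  # → 2
```

Even in a sketch this small, every validation rule (which kinds of agents exist, which attributes a Character may carry) is a classification choice fixed in advance by whoever designs the schema.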

Designing a taxonomy for annotating creative works required making multiple decisions; in other words, performing bias. In my case, creating data required reducing rich artworks to keywords describing agents and their actions. What felt particularly challenging was classifying characters. Assigning gender, age, race or ethnicity to fictive figures or personas was in many ways problematic. At the same time, it forced us to return to and rethink the notions of classes and attributes. Often, I simply wished I could externalize this component of classification to machine classifiers. Yet the extensive task of logging artworks into the database was at the same time helping me understand that machine vision trained to classify humans behaves in troubling ways.

My analysis of artworks, or ‘artistic audits’ as I call it, eventually revealed that a lack of diversity in datasets results in machines that misgender people, that binary gendering leads to stereotypical classification, and that machine vision continues to perpetuate the Western colonial gaze. I believe machine vision should be understood as Intuition Machines, because datasets embed worldviews. As with human intuition, harmful bias in machine vision leads to discrimination, and artworks are capable of showcasing how machine vision particularly discriminates against those already marginalized in society.

Machine Vision Datasets – Scrutinizing Images and Their Labels

Artistic research that critically examines visual datasets has pushed the computer vision community to revisit several influential datasets. Among widely circulated examples are Trevor Paglen and Kate Crawford’s artwork ImageNet Roulette and their accompanying essay excavating.ai, which highlighted the problematic labeling of humans in multiple datasets. Artists Adam Harvey and Jules LaPlace’s project exposing.ai, on the other hand, brought attention to how images in facial datasets are used without consent. To a great extent, such critiques have been directed at the content of visual datasets and their biased labeling, that is, how images are classified into arbitrary categories. Consequently, the responses aiming to ‘fix’ datasets have mainly involved corrective measures on the problematic content of the datasets in question. Corrective practices such as increasing diversity by balancing demographics, erasing problematic categories, or removing publicly available datasets are sensible approaches, but they address only part of the problem: biases embedded in datasets have already propagated into models pre-trained on them.

Both data curators and artists exhibiting content from datasets must engage with ethical questions about consent and reflect critically on how their work might inflict ‘data violence’. Many of these questions resonate with critical archival studies, which can be helpful for both data curators and artists in the emerging field of critical dataset studies. When creating the artwork Suspicious Behavior, we used material from datasets assembled to detect anomalous behavior in the domain of video surveillance, and we had to reflect critically on how we engaged with these visual datasets.

Suspicious Behavior © esc medien kunst labor, CYBORG-SUBJECTS exhibition. Photo: Martin Gross.

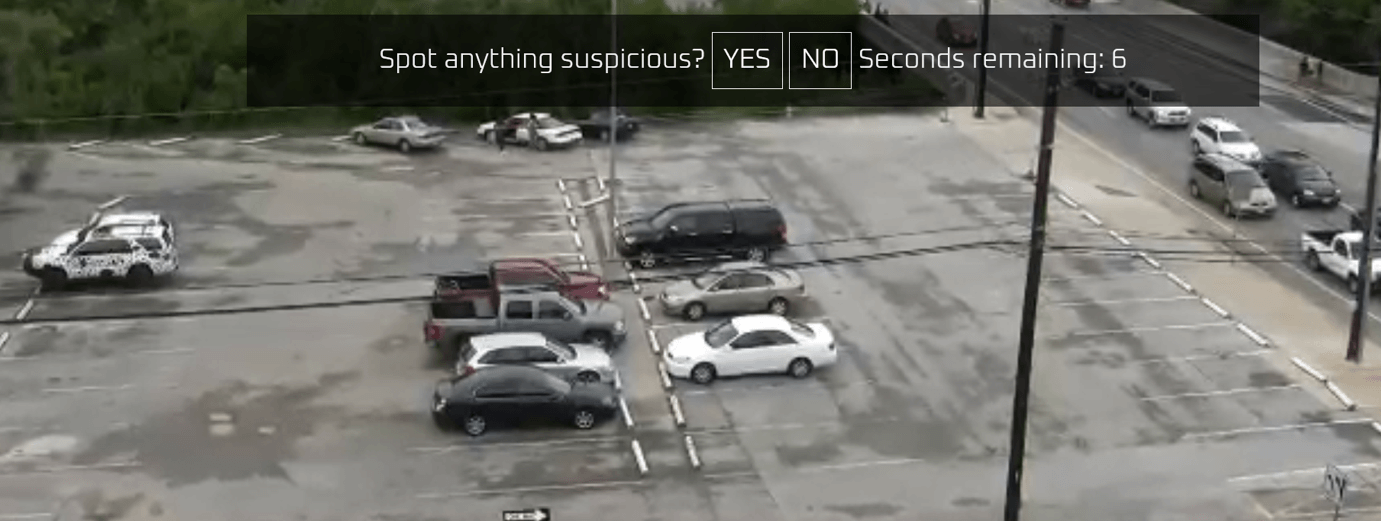

Suspicious Behavior – Critique of Curating Datasets

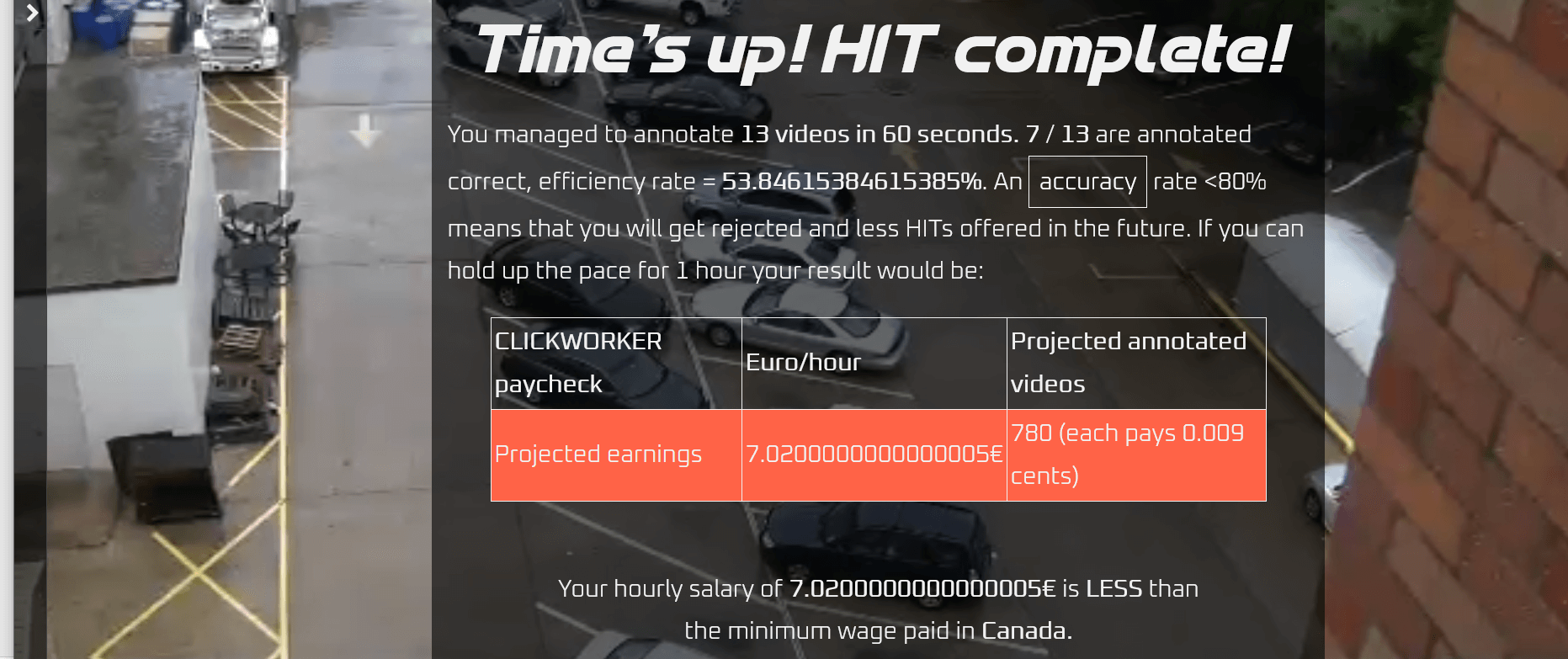

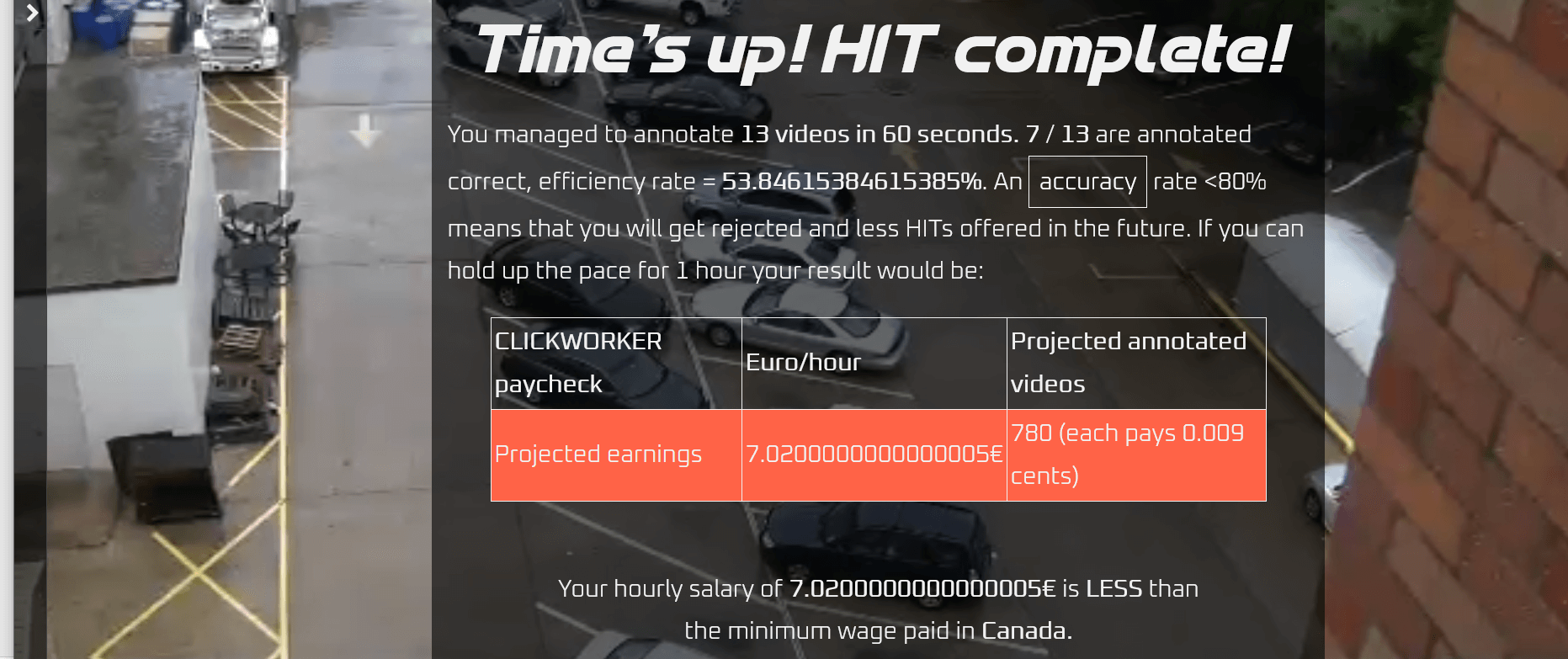

Suspicious Behavior is an artwork that invites readers to critically examine machine learning datasets from the perspective of an annotator, whose task is to attach labels to images. Artistic research for the project required a deep dive into the annotation practices behind visual datasets. For the tutorial, we developed an interface mimicking designs used to maximize the speed and accuracy of outsourced annotators. Our speculative interface asks the annotator to spot suspicious behavior in 10-second video segments. One of the speculative training modules gives the user 60 seconds to accurately annotate as many videos as possible. The test ends with a report declaring whether the result qualifies the trainee for the job. It becomes clear that to earn a living wage, the annotator can afford only to glance at images. Where ‘the “glance” is the norm,’ as Nicolas Malevé suggests, an annotation apparatus does not allow the questioning of how categories are made or how classes are defined. Addressing this, Suspicious Behavior became an exercise in understanding when bias is introduced into machine vision: a way to examine the interplay between data curators and annotators, and the circumstances in which labels are attached to images.

The annotation interface in Suspicious Behavior. Screenshot by KairUs.

Annotation report in Suspicious Behavior. Screenshot by KairUs.


My takeaway from both projects is that we need to critically examine the content of datasets to understand machine vision bias; even more importantly, we need to reflect critically on the processes of performing bias when we create datasets, because an unbiased dataset cannot exist. As computer vision datasets scale up from millions to billions of images, dataset curation requires increased automation, and classification becomes ever more externalized to machines. Yet choices are still made, requiring us to perform bias. These choices concern operations and processes more than categories and labels. It is thus equally important that we understand and reflect on the practices of curating datasets.

Linda Kronman is a media artist and designer who is currently completing a PhD at the University of Bergen, researching how machine vision is represented in digital art as part of the Machine Vision in Everyday Life project. Kronman is a member of the artist duo KairUs, where she creates art together with Andreas Zingerle, exploring topics such as surveillance, smart cities, IoT, cybercrime, online fraud, electronic waste and machine vision. KairUs has recently been recognized by the Austrian Federal Ministry of Arts and Culture, Civil Service and Sport with the BMKÖS/Mayer Outstanding Artist Award 2022 in the category of Media Art. This research was funded by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement No 771800).

Published: 02/23/2023